Someone on X said, “With AI, you can do the work of ten engineers”.

And sure, it sounds exciting. But let me ask you this: “Have you ever worked on a real-life product and felt like writing code was the only bottleneck?” Probably not.

It’s tempting to believe AI is a magic multiplier. And in some ways, it is. But if you zoom out and look at the full picture of what software engineers actually do, it becomes clear that AI is a great helper in some parts of the job and a questionable assistant in others.

Let’s break it down.

What do engineers actually spend time on?

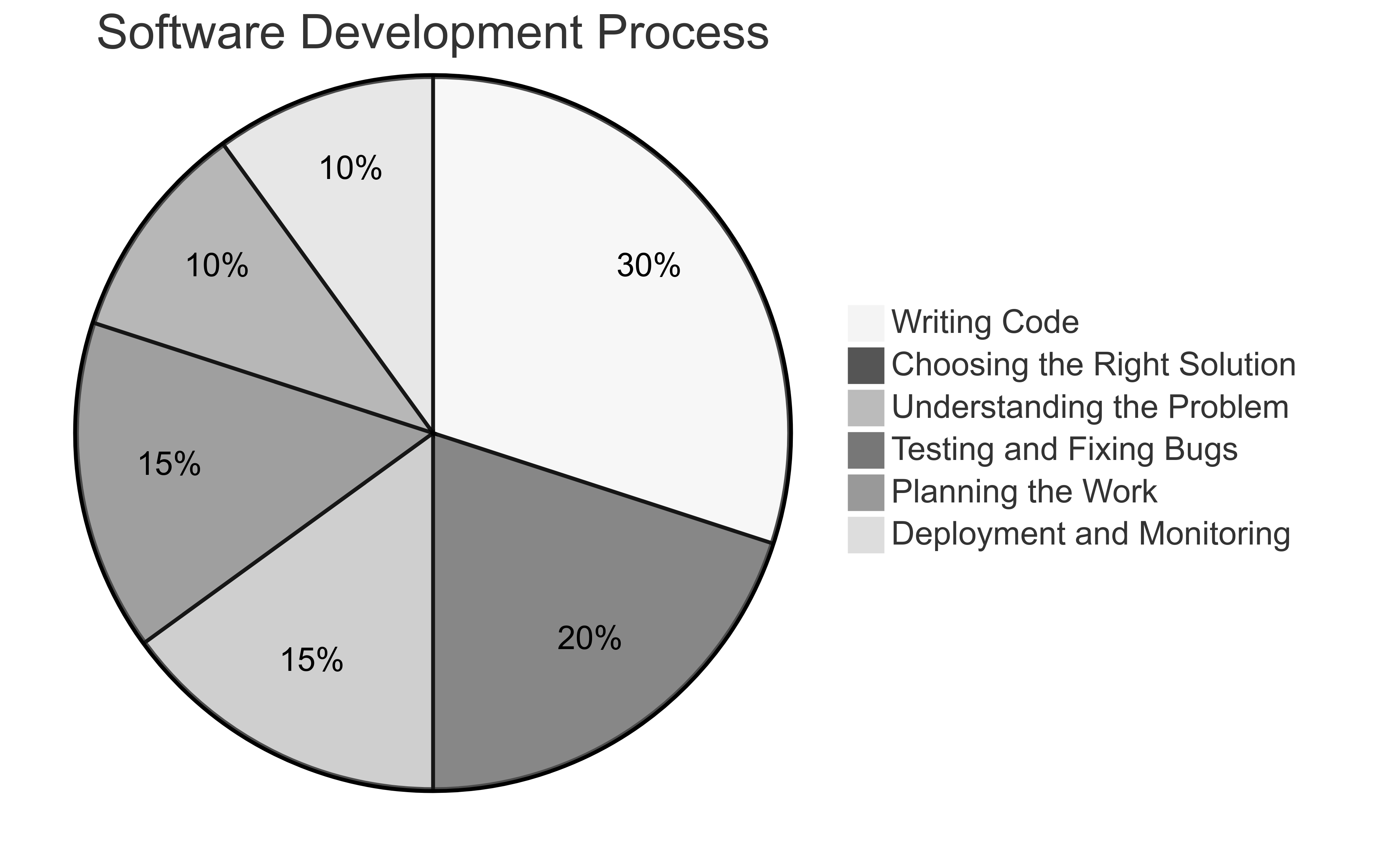

We often think of engineering as “writing code.” But that’s just one slice of the pie. Here's a simplified view of what software engineers really spend time on in a typical project:

- Understanding the Problem (15%): Talking to people, asking questions, and clearly defining the problem.

- Choosing the Right Solution (20%): Weighing existing systems and trade-offs, then picking the solution that best fits the current context.

- Planning the Work (10%): Writing clear, actionable implementation plans and system design documents.

- Writing Code (30%): Writing code that’s clean, readable, and easy to maintain.

- Testing and Fixing Bugs (15%): Making sure everything works as expected and quickly fixing issues.

- Deployment and Monitoring (10%): Launching changes safely and closely monitoring their performance.
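To see why code is rarely the only bottleneck, we can plug the rough percentages above into a back-of-the-envelope model in the spirit of Amdahl's law (a swapped-in estimate, not a measurement). This sketch assumes the shares are exact and that speeding up one activity leaves the others unchanged; the names `TIME_SHARE` and `overall_speedup` are hypothetical.

```python
# Share of project time per activity, taken from the simplified breakdown above.
TIME_SHARE = {
    "understanding_problem": 0.15,
    "choosing_solution": 0.20,
    "planning": 0.10,
    "writing_code": 0.30,
    "testing_and_fixing": 0.15,
    "deploy_and_monitor": 0.10,
}


def overall_speedup(shares: dict[str, float], speedups: dict[str, float]) -> float:
    """Amdahl's-law style estimate of how much faster the whole project gets.

    Activities missing from `speedups` keep their original duration (factor 1.0).
    """
    new_total = sum(share / speedups.get(name, 1.0) for name, share in shares.items())
    return sum(shares.values()) / new_total


if __name__ == "__main__":
    # Hypothetical scenario: AI makes writing code 10x faster, nothing else changes.
    print(f"{overall_speedup(TIME_SHARE, {'writing_code': 10.0}):.2f}x")
```

Under these assumptions, coding ten times faster makes the whole project only about 1.37x faster. Even infinitely fast coding caps out around 1.43x, because the other 70% of the work is untouched.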

Now let’s ask the real question: in which of these can AI truly help? And where does it struggle?

Where AI makes a real difference

Let’s start with the good news.

Writing Code

AI really shines when it comes to writing code. Tools like GitHub Copilot, ChatGPT, Cursor, or Claude Code are great at handling the boring stuff, such as setting up boilerplate, creating new files, or writing tests. If you already know what you want to build, they can seriously speed things up.

But, and this is important, it’s a bit like working with a junior engineer. It might get the job done, but you still need to double-check everything. Does the code make sense for your system? Is it clean and maintainable? AI won’t catch those things unless you guide it.

So yes, it helps you move faster, but it also means you’ll spend time reviewing and refining.

A big part of coding is understanding the code that’s already there. For years, reading and making sense of existing code has been one of the hardest parts of the job, especially for newer engineers.

Now, AI can help speed up that process. It can describe what a piece of code is doing, show you where it's being used, and help you understand how different parts fit together.

But don’t skip the hard parts entirely. The struggle of figuring things out on your own is where real learning happens. AI can support you, but to truly get better and own your craft, you still need to do the work of understanding it yourself.

Planning and Docs

Trying to write a design doc or outline a launch plan from a blank page is never fun. That’s where AI can really help. It’s like someone tossing you a rough first draft so you’re not starting from zero.

It can also help you get organized: breaking big ideas into smaller chunks, building out timelines, or pulling tasks out of messy Slack threads.

The trick? Be specific. The clearer you are with what you need, the better AI performs.

Testing and Automation

AI is good at writing unit tests based on your code and at simulating how users might interact with your app, especially if it knows your API or UI setup. That means it can take on much of the testing work, from unit tests to integration tests and even end-to-end tests.

It can also help with bug fixing. Give it enough context, and it’ll help trace where things might be breaking or throw out a few possible fixes. But don’t expect magic: it still needs direction. Like a junior teammate, it’s helpful and fast, but not always right unless you steer it properly.

Deployment and Monitoring

AI is becoming pretty handy when it comes to keeping an eye on your systems. It can go through logs, spot things that look off, and even flag potential outages before they happen by learning from past incidents.

It’s not here to replace your observability tools, but it’s like having an extra set of eyes on duty all the time. A helpful backup, not the main monitor.

Where AI falls short

Now let’s talk about the areas where AI isn’t ready yet, and maybe never will be.

Choosing the Right Solution

Should you take a shortcut or build something solid? Split the service or keep it together? AI might suggest some options, but it doesn’t really know your system, your past decisions, or what’s coming next.

These aren’t just coding questions; they need real judgment. And AI doesn’t have that. It can’t feel trade-offs or understand the people and goals behind the work. That’s why your experience still matters.

Understanding the Problem

It’s easy to miss how much thinking goes into understanding a problem. When you get a new task or bug, your brain does a lot behind the scenes, asking questions, noticing when something feels off, and piecing things together.

AI can’t do that. It doesn’t know how to dig deeper or question what it sees. At best, it copies what it’s seen before. But truly making sense of a problem? That’s still on you.

Designing for the Long Term

AI can generate working code, sure. But will it be maintainable? Will it respect system boundaries, follow design patterns, or play nicely with the rest of your stack? Often, no.

AI doesn’t think in trade-offs. It doesn’t care if your service is hard to test or has a leaky abstraction. You do.

Use AI where it helps, own what matters

So, how do you strike the right balance?

- Use AI to accelerate the known: Tasks you’ve done before and patterns that repeat are perfect for AI assistance.

- Don’t outsource judgment: You still need to decide what to build, how to build it, and why. That’s the core of your value as an engineer.

- Review everything: AI can give you speed, but you give it meaning. Review its output like you would review a new junior teammate’s code.

- Think in loops, not handoffs: AI is best when there’s a tight feedback loop. Prompt → result → edit → re-prompt. Stay in the loop.

- Invest in context clarity: Whether for humans or AI, better outcomes come from better input. Make your problem, goals, and constraints explicit.

Use AI to go faster, but don’t forget to look up from the keyboard and ask: Are we building the right thing? That answer still comes from you.

Want something practical? Here’s a simple decision matrix:

| Task Type | AI-First | Human-Led |
|---|---|---|
| Write a unit test | ✅ Yes | ❌ |
| Design a system | ❌ | ✅ Yes |
| Update docs from chat | ✅ Yes | ❌ |
| Decide on architecture | ❌ | ✅ Yes |
| Refactor for clarity | ⚠️ Maybe | ✅ Yes |
| Write your opinion | … | … |
| Write your opinion | … | … |
| Write your opinion | … | … |